|

SOP: A Scalable Online Post-Training System for Vision-Language-Action Models

Mingjie Pan, Siyuan Feng, Qinglin Zhang, Xinchen Li, Jianheng Song, Chendi Qu, Yi Wang, Chuankang Li, Ziyu Xiong, Zhi Chen, Yi Liu, Jianlan Luo

ArXiv

Paper

|

|

Unified Embodied VLM Reasoning with Robotic Action via Autoregressive Discretized Pre-training

Yi Liu, Sukai Wang, Dafeng Wei, Xiaowei Cai, Linqing Zhong, Jiange Yang, Guanghui Ren, Jinyu Zhang, Maoqing Yao, Chuankang Li, Xindong He, Liliang Chen, Jianlan Luo

ArXiv

Paper

|

|

Act2Goal:

From World Model to General Goal-conditioned Policy

Pengfei Zhou, Liliang Chen, Shengcong Chen, Di Chen,

Wenzhi Zhao, Rongjun Jin, Guanghui Ren Jianlan Luo

ArXiv

Paper

|

|

Precise and Dexterous Robotic Manipulation via Human-in-the-Loop Reinforcement Learning

Jianlan Luo, Charles Xu, Jeffrey Wu, Sergey Levine

Science Robotics 2025

Paper |

Code |

UC Berkeley News |

Tech Xplore

|

|

Reflective Planning: Vision-Language Models for Multi-Stage Long-Horizon Robotic Manipulation

Yunhai Feng, Jiaming Han, Zhuoran Yang, Xiangyu Yue, Sergey Levine, Jianlan Luo

Conference on Robot Learning (CoRL) 2025

Paper |

Code

|

|

RLDG: Robotic Generalist Policy Distillation via Reinforcement Learning

Charles Xu, Qiyang Li, Jianlan Luo, Sergey Levine

Robotics: Science and Systems (RSS) 2025

Paper |

Code

|

|

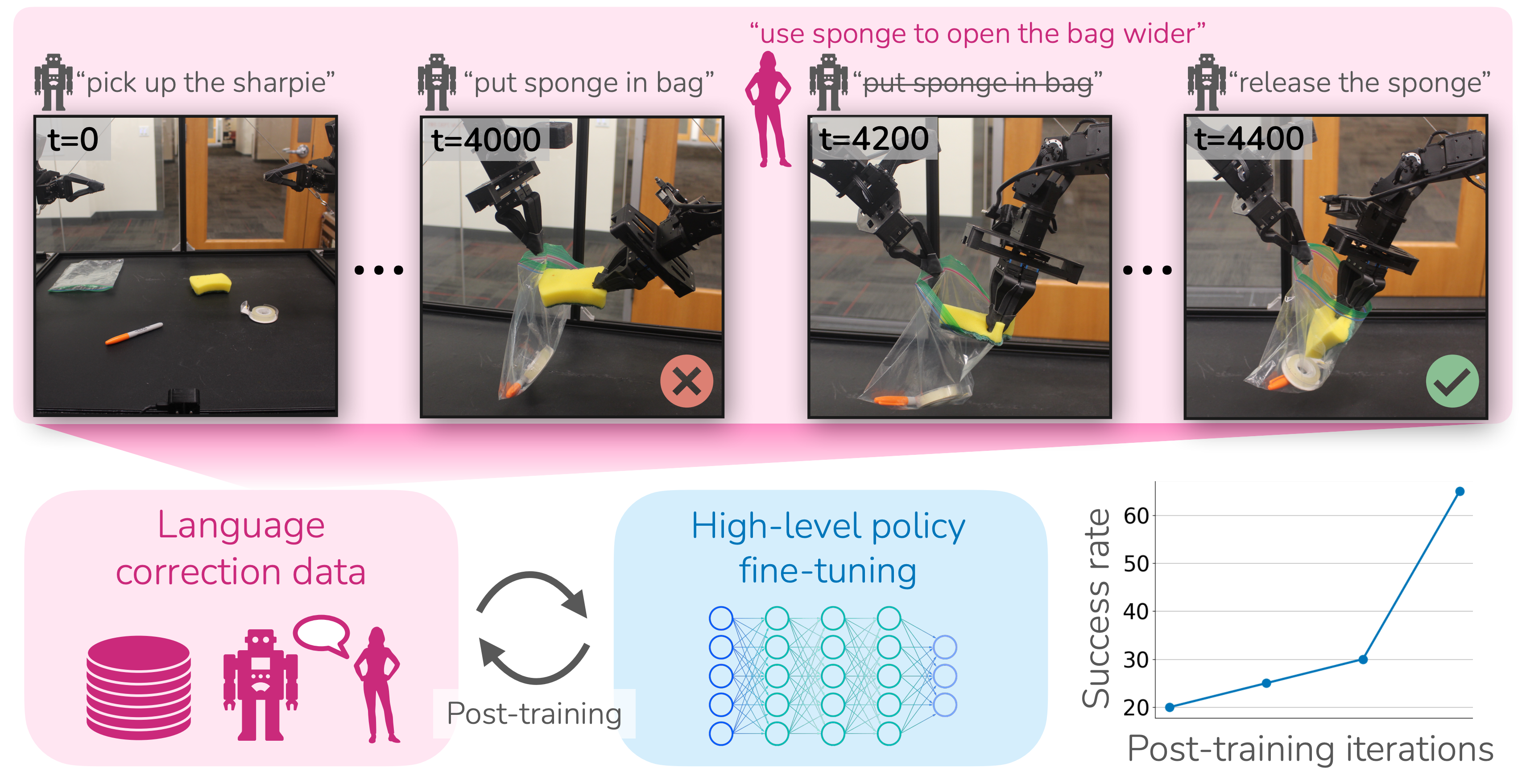

Yell At Your Robot: Improving On-the-Fly from Language Corrections

Lucy Xiaoyang Shi, Zheyuan Hu, Tony Z. Zhao, Archit Sharma, Karl Pertsch, Jianlan Luo, Sergey Levine, Chelsea Finn

Robotics: Science and Systems (RSS) 2024

Paper |

Code

|

|

Octo: An Open-Source Generalist Robot Policy

Octo Model Team

Robotics: Science and Systems (RSS) 2024

Paper |

Code

|

|

SERL: A Software Suite for Sample-Efficient Robotic Reinforcement Learning

Jianlan Luo*,

Zheyuan Hu*,

Charles Xu,

Siri Gadipudi,

Archit Sharma,

Rehaan Ahmad,

Stefan Schaal,

Chelsea Finn,

Abhishek Gupta,

Sergey Levine

International Conference on Robotics and Automation (ICRA) 2024

arXiv |

Video |

Code |

Media Coverage

|

|

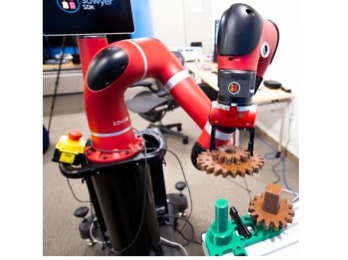

FMB: A Functional Manipulation Benchmark for Generalizable Robotic Learning

Jianlan Luo*,

Charles Xu*,

Fangchen Liu,

Liam Tan,

Zipeng Lin,

Jeffrey Wu,

Pieter Abbeel,

Sergey Levine

International Journal of Robotics Research (IJRR) 2024

arXiv |

IJRR Version |

Video |

Data

|

|

RLIF: Interactive Imitation Learning as Reinforcement Learning

Jianlan Luo*,

Perry Dong*,

Yuexiang Zhai,

Yi Ma,

Sergey Levine

International Conference on Learning Representations (ICLR) 2024

arXiv |

Video |

Code |

Media Coverage 1,

2,

3,

4

|

|

Open X-Embodiment: Robotic Learning Datasets and RT-X Models

Open X-Embodiment Collaboration

International Conference on Robotics and Automation (ICRA) 2024

Best Conference Paper Award

arXiv |

Blog Post |

Dataset

|

|

Multi-Stage Cable Routing through Hierarchical Imitation Learning

Jianlan Luo*,

Charles Xu*,

Xinyang Geng,

Gilbert Feng,

Kuan Fang,

Liam Tan,

Stefan Schaal,

Sergey Levine

IEEE Transactions on Robotics (T-RO) 2024

arXiv |

T-RO version |

Video |

Code |

Dataset |

Data in tfds

|

|

Action-Quantized Offline Reinforcement Learning for Robotic Skill Learning

Jianlan Luo,

Perry Dong,

Jeffrey Wu,

Aviral Kumar,

Xinyang Geng,

Sergey Levine

Conference on Robot Learning (CoRL) 2023

arXiv |

Code

|

|

Offline Meta-Reinforcement Learning for Industrial Insertion

Tony Z. Zhao*,

Jianlan Luo*,

Oleg Sushkov,

Rugile Pevceviciute,

Nicolas Heess,

Jon Scholz,

Stefan Schaal,

Sergey Levine

International Conference on Robotics and Automation (ICRA) 2022

arXiv |

Video |

Media Coverage

|

|

Robust Multi-Modal Policies for Industrial Assembly via Reinforcement Learning and Demonstrations: A Large-Scale Study

Jianlan Luo*,

Oleg Sushkov*,

Rugile Pevceviciute*,

Wenzhao Lian,

Chang Su,

Mel Vecerik,

Stefan Schaal,

Jon Scholz

Robotics: Science and Systems (RSS) 2021

arXiv |

Video |

Media Coverage

|

|

Reinforcement Learning on Variable Impedance Controller for High-Precision Robotic Assembly

Jianlan Luo,

Eugen Solowjow,

Chengtao Wen,

Juan Aparicio Ojea,

Alice M Agogino,

Aviv Tamar,

Pieter Abbeel

International Conference on Robotics and Automation (ICRA) 2019

arXiv |

Video |

Media Coverage

|